Providing impartial insights and news on defence, focusing on actionable opportunities.

It comes as UK Space Command seeks to become “a more intelligent customer”.

Startups and small businesses are eligible to apply.

Interested parties have a limited time to apply.

The ‘loyal wingman’ uncrewed systems will leverage AI for complex battlefield situations.

The fund specifically targets small, UK-owned companies that are new to defence.

The system could revolutionise tactical operations.

The marketplace follows last year's establishment of JIATF-401, a new task force focused on countering small uncrewed aerial systems (C-UAS).

The new office seeks to cut red tape and accelerate procurement with small companies.

The plan aims to increase the US Navy's lethality, complementing the new Golden Fleet initiative.

While the ‘as-a-service’ model is common in software procurement, militaries are beginning to apply this approach to acquiring other capabilities.

UK launches call for C-UAS solutions

Participation is a minimum requirement for those looking to win UK Home Office C-UAS contracts.

The announcements come amid the country’s Society and Defence Annual Conference in Sälen.

-

- News

- Europe

- Space

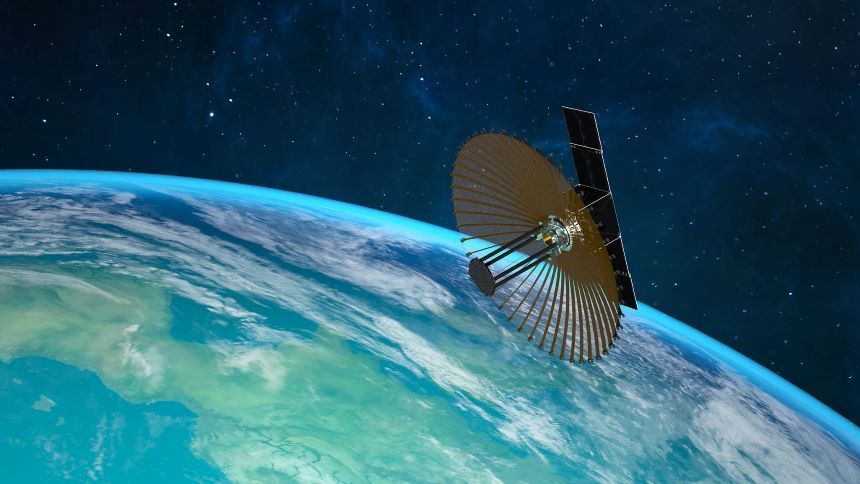

UK companies launch satellite with innovative new antenna

UK SME Oxford Space Systems has deployed its inaugural Wrapped Rib Antenna in space.

UK to develop new ballistic missiles for Ukraine

Up to three contracts are expected to be awarded.

UK military faces challenges amid budget constraints, defence chief warns

Difficult decisions will have to be made.